High Bandwidth Memory Market Report by Application & Technology 2025

High Bandwidth Memory Market Size and Forecast 2025–2033

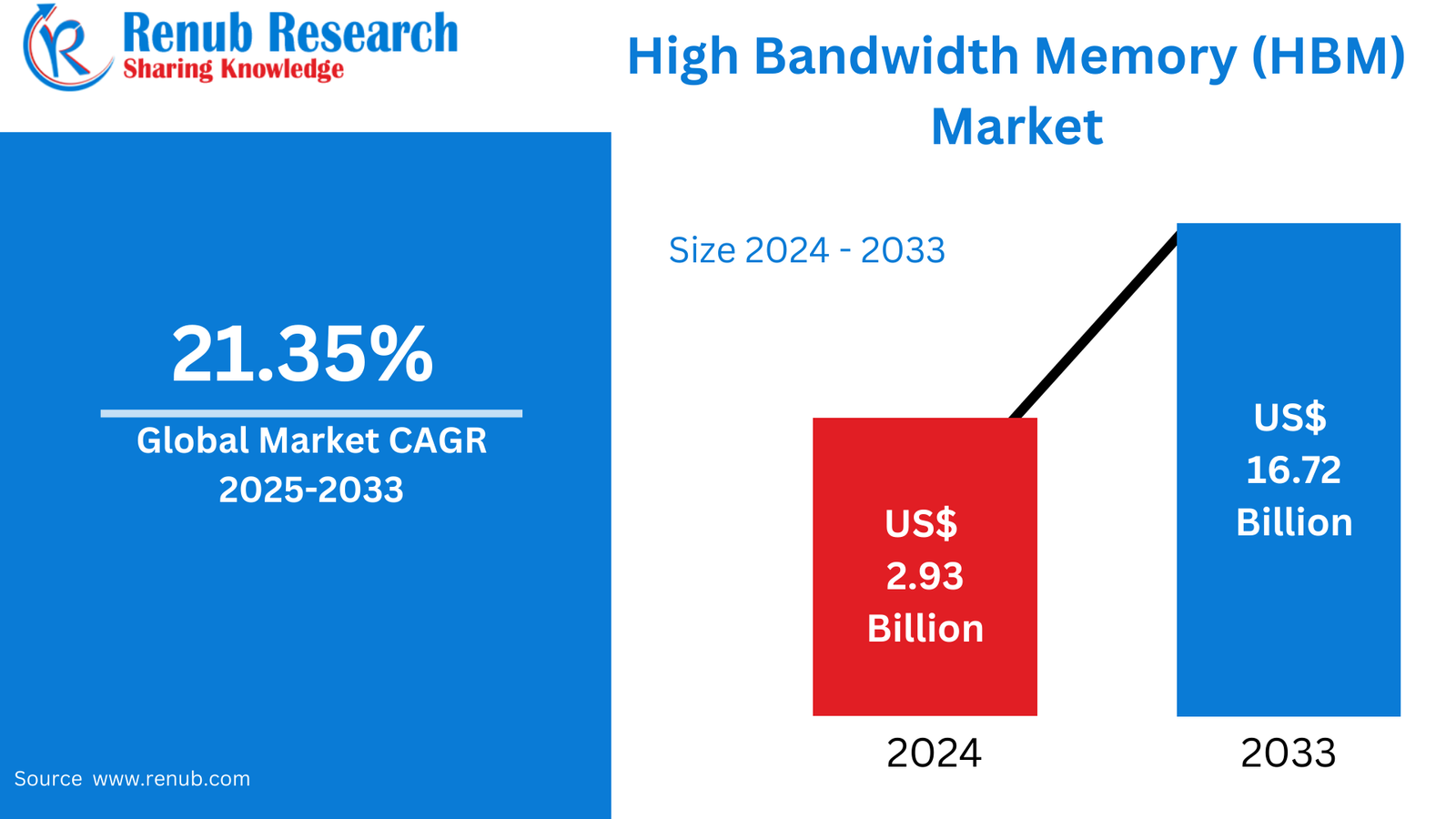

According to Renub Research, the High Bandwidth Memory (HBM) market is growing rapidly as computing systems worldwide shift toward data-intensive, high-performance architectures. Valued at US$ 2.93 billion in 2024, the market is forecast to reach US$ 16.72 billion by 2033, expanding at a CAGR of 21.35% from 2025 to 2033. Rising demand from artificial intelligence, machine learning, cloud data centers, high-performance computing (HPC), gaming, and advanced automotive systems is driving this expansion.
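The headline figures can be checked with the standard compound annual growth rate (CAGR) formula. The sketch below treats 2024 as the base year and 2033 as the end year, which gives nine compounding periods. That period count is an assumption about how the report defines its 2025 to 2033 window.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the report, in US$ billions.
BASE_2024 = 2.93
FORECAST_2033 = 16.72
PERIODS = 2033 - 2024  # nine compounding years (assumed convention)

rate = cagr(BASE_2024, FORECAST_2033, PERIODS)
print(f"Implied CAGR: {rate:.2%}")

# Rebuild the year-by-year path implied by a constant growth rate.
value = BASE_2024
for year in range(2025, 2034):
    value *= 1 + rate
    print(year, round(value, 2))
```

Under this convention the implied rate comes out at about 21.35%, which matches the report. The constant-rate path is a smoothing device, not a forecast of individual years.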

High bandwidth memory represents a major evolution in memory design. Conventional DRAM is packaged as DDR modules or GDDR chips. HBM instead stacks multiple DRAM dies vertically, links them with through-silicon vias (TSVs), and connects each stack to the processor through a very wide interface (1,024 data bits per stack through HBM3E). This design delivers far higher data transfer rates per package at lower energy per bit. These advantages make HBM indispensable for modern processors that must move massive amounts of data efficiently.
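Peak bandwidth for a single stack follows directly from the interface width W (in bits) and the per-pin data rate R_pin. The worked example below assumes an HBM3 stack with a 1,024-bit interface running at 6.4 Gb/s per pin:

```latex
B_{\text{stack}} = \frac{W \cdot R_{\text{pin}}}{8}
                 = \frac{1024 \times 6.4\ \text{Gb/s}}{8\ \text{bit/byte}}
                 = 819.2\ \text{GB/s}
```

This is a theoretical peak. Shipping parts run at various speed bins, and sustained throughput in real workloads is lower.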

As AI models grow in complexity, cloud infrastructure scales globally, and graphics-heavy applications become mainstream, HBM has transitioned from a niche solution to a strategic component of next-generation computing platforms.

Download Free Sample Report: https://www.renub.com/request-sample-page.php?gturl=high-bandwidth-memory-hbm-market-p.php

High Bandwidth Memory Industry Outlook

The global HBM industry is evolving rapidly as semiconductor manufacturers and system designers respond to increasing computational intensity. Advanced workloads such as deep neural networks, large language models, autonomous driving systems, and real-time analytics require memory architectures capable of sustaining extreme throughput without bottlenecks.

HBM meets this requirement by moving data in parallel across thousands of connections between memory and processor. This gives it far higher bandwidth per package than conventional DDR or GDDR memory. Pairing HBM with GPUs, AI accelerators, CPUs, and FPGAs has become a defining trend across multiple industries.

Recent advancements in interposer technology, chiplet architectures, and advanced packaging have further strengthened the HBM value proposition. These innovations shorten signal paths, improve thermal management, and enable tighter integration between logic and memory. As a result, HBM adoption continues to accelerate across hyperscale data centers, supercomputing facilities, and edge-AI platforms.

Key Drivers of Growth in the High Bandwidth Memory Market

Rising Demand for Artificial Intelligence and Machine Learning

Artificial intelligence and machine learning workloads require exceptionally high memory bandwidth to process vast datasets in real time. Training large AI models involves continuous data movement between processors and memory, making bandwidth a critical performance factor.
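One common way to reason about why bandwidth matters is the roofline model. A kernel's attainable throughput is capped by whichever is lower: the processor's peak compute, or memory bandwidth multiplied by the kernel's arithmetic intensity (FLOPs performed per byte moved). The sketch below uses made-up accelerator figures (`PEAK_TFLOPS`, `MEM_BW_TBPS`) purely for illustration. They do not describe any specific product.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tbps: float,
                      flops_per_byte: float) -> float:
    """Roofline bound: min(compute ceiling, bandwidth * arithmetic intensity).

    bandwidth_tbps is in TB/s, so bandwidth * (FLOP/byte) yields TFLOP/s.
    """
    return min(peak_tflops, bandwidth_tbps * flops_per_byte)


# Hypothetical accelerator, for illustration only.
PEAK_TFLOPS = 1000.0  # peak compute, TFLOP/s
MEM_BW_TBPS = 3.0     # memory bandwidth, TB/s

# Ridge point: the intensity at which a kernel stops being memory-bound.
ridge = PEAK_TFLOPS / MEM_BW_TBPS
print(f"Ridge point: {ridge:.0f} FLOP/byte")

for intensity in (2, 50, 500):
    perf = attainable_tflops(PEAK_TFLOPS, MEM_BW_TBPS, intensity)
    bound = "memory-bound" if intensity < ridge else "compute-bound"
    print(f"{intensity:>4} FLOP/byte -> {perf:7.1f} TFLOP/s ({bound})")
```

Kernels to the left of the ridge point gain speed in direct proportion to memory bandwidth. Low-intensity operations such as the matrix-vector products in token-by-token language model inference fall into this region.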

HBM supplies the very high throughput needed for AI training and inference, reducing the time it takes to train models and serve predictions. As AI adoption expands across healthcare, finance, retail, manufacturing, and defense, demand for HBM-enabled processors continues to surge. Chipmakers increasingly design AI accelerators around HBM to maintain competitive performance benchmarks.

Expansion of Cloud Computing and Hyperscale Data Centers

Modern data centers power cloud computing, big data analytics, and AI-as-a-service platforms. These environments require memory solutions that balance performance, scalability, and energy efficiency.

HBM is increasingly deployed in servers used for AI workloads, high-frequency trading, and scientific computing. Its ability to deliver higher bandwidth per watt allows hyperscalers to improve performance density while managing power and cooling constraints. The growth of edge computing further amplifies HBM demand, as compact yet powerful systems require high-performance memory in limited form factors.
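Interface power scales with the rate of data moved multiplied by the energy spent per bit. A lower energy-per-bit figure therefore translates directly into more bandwidth within a fixed power budget. The picojoule-per-bit values below ("memory A" and "memory B") are hypothetical placeholders chosen only to show the arithmetic. Actual figures vary by generation, vendor, and measurement method.

```python
def interface_power_watts(bandwidth_gbytes_s: float, pj_per_bit: float) -> float:
    """Power (W) = bits per second * joules per bit."""
    bits_per_second = bandwidth_gbytes_s * 1e9 * 8
    return bits_per_second * pj_per_bit * 1e-12


def bandwidth_per_watt(pj_per_bit: float) -> float:
    """GB/s delivered per watt of interface power at a given energy per bit."""
    bytes_per_joule = 1.0 / (8 * pj_per_bit * 1e-12)
    return bytes_per_joule / 1e9


# Hypothetical energy-per-bit values, for illustration only.
for label, pj in (("memory A", 4.0), ("memory B", 8.0)):
    watts = interface_power_watts(1000.0, pj)  # power needed to move 1 TB/s
    print(f"{label}: {watts:.1f} W at 1 TB/s, "
          f"{bandwidth_per_watt(pj):.2f} GB/s per W")
```

Halving energy per bit halves the power needed for the same bandwidth. This is the performance-density lever the paragraph above describes for hyperscalers.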

Increasing Complexity in Gaming and Graphics Processing

The gaming and graphics industries are major contributors to HBM adoption. Advanced gaming engines, virtual reality, augmented reality, and real-time ray tracing demand memory systems capable of sustaining high frame rates and ultra-high-resolution visuals.

HBM allows GPUs to deliver high performance within a tight power and thermal budget. Its compact on-package footprint also frees board space for smaller card designs and improved cooling. HBM has appeared in premium graphics products, although most current consumer gaming GPUs use GDDR memory because of HBM's higher cost. Its role in graphics is concentrated in high-end and professional solutions.

Challenges in the High Bandwidth Memory Market

High Manufacturing and Integration Costs

One of the primary challenges limiting broader HBM adoption is its high production cost. HBM manufacturing involves complex processes such as 3D stacking, TSV formation, and advanced packaging, all of which require specialized equipment and expertise.

Integrating HBM with processors on interposers or advanced substrates adds further complexity and expense. These factors restrict adoption primarily to high-end and mission-critical applications, slowing penetration into cost-sensitive market segments. Reducing manufacturing costs through yield improvements and process optimization remains a key industry objective.
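A simplified way to see why stacking raises cost is compound yield. If every die and every bonding step must succeed for the stack to be sellable, losses multiply with stack height. The model below assumes independent failures and ignores the base logic die. The yield percentages are illustrative, not published manufacturer data. Real manufacturing flows reduce this effect by testing dies before stacking (known-good-die screening) and by using repair.

```python
def stack_yield(die_yield: float, bond_yield: float, height: int) -> float:
    """Probability that a stack of `height` DRAM dies is fully good.

    Simplified model with independent failures: every die must be good and
    each of the (height - 1) die-to-die bonds must succeed.
    """
    return die_yield ** height * bond_yield ** (height - 1)


# Illustrative yields only; not published manufacturer figures.
DIE_YIELD = 0.98
BOND_YIELD = 0.99

for height in (4, 8, 12, 16):
    y = stack_yield(DIE_YIELD, BOND_YIELD, height)
    print(f"{height:>2}-high stack: {y:.1%} yield, "
          f"{1 / y:.2f} stacks started per good stack")
```

Even with high per-step yields, taller stacks require noticeably more starts per good unit. This is one reason yield improvement is central to cost reduction.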

Supply Chain Constraints and Vendor Concentration

The HBM supply chain is highly concentrated. Only a handful of memory manufacturers, chiefly SK hynix, Samsung Electronics, and Micron Technology, can produce HBM at scale. This concentration increases vulnerability to supply disruptions caused by equipment shortages, geopolitical tensions, or sudden demand spikes.

As AI and cloud computing demand outpaces supply, shortages can delay product launches and increase pricing pressure across the ecosystem. Expanding fabrication capacity, diversifying production locations, and strengthening supplier partnerships are essential to stabilizing long-term market growth.

Technology Analysis

Evolution of HBM Generations

The HBM market is segmented by technology generation, including HBM2, HBM2E, HBM3, HBM3E, and emerging HBM4. Each generation delivers improvements in bandwidth, capacity, and energy efficiency.

HBM3 and HBM3E are currently gaining strong traction because they suit AI accelerators and high-performance GPUs. HBM4 doubles the per-stack interface width to 2,048 bits and supports taller, higher-capacity stacks. The resulting gain in per-stack bandwidth positions it as a cornerstone for future AI and exascale computing platforms.
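The generational gains can be compared using width-times-rate arithmetic. The pin rates below are approximate, representative values assumed for illustration, since shipping parts vary by vendor and speed bin. The 1,024-bit width through HBM3E and the 2,048-bit width for HBM4 reflect the JEDEC interface definitions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HbmGeneration:
    name: str
    bus_width_bits: int   # data I/O width per stack
    pin_rate_gbps: float  # approximate per-pin data rate (assumed)

    @property
    def stack_bandwidth_gbs(self) -> float:
        """Theoretical peak per-stack bandwidth in GB/s."""
        return self.bus_width_bits * self.pin_rate_gbps / 8


# Representative pin rates, assumed for illustration.
GENERATIONS = [
    HbmGeneration("HBM2", 1024, 2.0),
    HbmGeneration("HBM2E", 1024, 3.6),
    HbmGeneration("HBM3", 1024, 6.4),
    HbmGeneration("HBM3E", 1024, 9.6),
    HbmGeneration("HBM4", 2048, 8.0),
]

for gen in GENERATIONS:
    print(f"{gen.name:<6} {gen.bus_width_bits:>5}-bit x {gen.pin_rate_gbps:>4} Gb/s"
          f" = {gen.stack_bandwidth_gbs:7.1f} GB/s per stack")
```

The HBM4 row illustrates the newer design choice. Doubling the interface width raises per-stack bandwidth even without a higher pin rate than HBM3E.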

Application-Based Market Analysis

Servers and High-Performance Computing

Servers and HPC systems represent a major application segment for HBM. Scientific simulations, weather modeling, genomic research, and financial analytics all require massive parallel processing capabilities.

HBM allows these systems to operate at peak efficiency by minimizing memory bottlenecks. Governments, research institutions, and enterprises continue to invest heavily in HPC infrastructure, supporting sustained demand for high-bandwidth memory solutions.

Consumer Electronics and Automotive Applications

Beyond data centers, HBM adoption is expanding into consumer electronics and automotive systems. Advanced driver-assistance systems (ADAS), autonomous driving platforms, and in-vehicle AI require real-time data processing with strict latency constraints.

HBM’s high throughput and energy efficiency make it suitable for these safety-critical applications. As vehicles become increasingly software-defined, memory performance will play a decisive role in system reliability and responsiveness.

Regional Market Analysis

North America High Bandwidth Memory Market

North America remains the largest regional market, driven by leadership in AI innovation, cloud computing, and semiconductor design. The region benefits from strong R&D ecosystems, advanced fabrication facilities, and close collaboration between technology companies and research institutions.

Continued investment in AI infrastructure and defense computing is expected to sustain long-term HBM demand.

Europe High Bandwidth Memory Market

Europe’s HBM market growth is supported by industrial automation, automotive engineering, and scientific research. Countries with strong manufacturing bases and HPC initiatives are increasingly integrating high-bandwidth memory into advanced computing systems to improve performance and energy efficiency.

Asia-Pacific High Bandwidth Memory Market

Asia-Pacific is both a major production hub and a rapidly growing consumer of HBM. Investments in semiconductor self-reliance, data centers, and AI development are accelerating regional demand. The presence of advanced memory fabrication capabilities strengthens the region’s strategic importance in the global HBM supply chain.

Middle East & Africa High Bandwidth Memory Market

The Middle East, particularly Saudi Arabia, is emerging as a promising market due to national digital transformation initiatives. Investments in smart cities, AI infrastructure, and cloud services are creating new demand for high-performance computing and memory technologies.

Recent Developments in the High Bandwidth Memory Industry

Recent industry developments highlight the accelerating pace of innovation in HBM technology. Leading semiconductor companies have introduced next-generation HBM solutions with higher capacities and faster speeds to support AI-optimized processors.

Standardization efforts, led by the JEDEC Solid State Technology Association, continue to advance, enabling greater interoperability and scalability across computing platforms. These developments are expected to further solidify HBM’s role as a foundational technology for next-generation computing.

Market Segmentation

By Application

· Servers

· Networking

· High-Performance Computing

· Consumer Electronics

· Automotive and Transportation

By Technology

· HBM2

· HBM2E

· HBM3

· HBM3E

· HBM4

By Memory Capacity per Stack

· 4 GB

· 8 GB

· 16 GB

· 24 GB

· 32 GB and Above
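Per-stack capacity equals the number of stacked DRAM dies multiplied by the density of each die. Die densities are quoted in gigabits, so the product is divided by 8 to give gigabytes. The configurations below are examples assumed for illustration. The `capacity_segment` helper is hypothetical, not the report's methodology: it maps each result down to the nearest capacity segment listed above.

```python
def stack_capacity_gb(stack_height: int, die_density_gbit: int) -> float:
    """Capacity in GB of a stack: dies * per-die density (Gbit) / 8."""
    return stack_height * die_density_gbit / 8


def capacity_segment(capacity_gb: float) -> str:
    """Map a capacity to a per-stack segment (hypothetical floor mapping)."""
    if capacity_gb >= 32:
        return "32 GB and Above"
    for bucket in (24, 16, 8, 4):
        if capacity_gb >= bucket:
            return f"{bucket} GB"
    return "below 4 GB"


# Example configurations: (stack height, die density in Gbit), assumed.
CONFIGS = [(4, 8), (8, 8), (8, 16), (8, 24), (12, 24), (16, 32)]

for height, density in CONFIGS:
    cap = stack_capacity_gb(height, density)
    print(f"{height:>2}-high x {density} Gbit = {cap:g} GB -> {capacity_segment(cap)}")
```

Both levers raise capacity: taller stacks add dies, and denser dies add bits per layer. The move from 8-high to 12-high stacks of 24 Gbit dies, for example, lifts a stack from 24 GB to 36 GB.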

By Processor Interface

· GPU

· CPU

· AI Accelerator / ASIC

· FPGA

· Others

By Region

· North America

· Europe

· Asia Pacific

· Latin America

· Middle East & Africa

Competitive Landscape and Key Players

The global HBM market is highly competitive and innovation-driven. Major participants focus on expanding manufacturing capacity, improving yield rates, and developing advanced packaging technologies. Strategic partnerships with processor manufacturers and cloud service providers are central to maintaining market leadership.

Key companies operating in the high bandwidth memory ecosystem include Samsung Electronics Co., Ltd., SK hynix Inc., Micron Technology, Inc., Intel Corporation, Advanced Micro Devices, Inc., Nvidia Corporation, Amkor Technology, Inc., and Powertech Technology Inc.

Each company is evaluated across strategic dimensions including product portfolio, leadership, recent developments, SWOT analysis, and revenue performance.

Conclusion

The High Bandwidth Memory Market is set for transformative growth through 2033, driven by accelerating AI adoption, expanding cloud infrastructure, and rising demand for high-performance computing. While challenges related to cost and supply chain constraints remain, continuous technological innovation and strategic investments are expected to overcome these barriers.

As digital ecosystems become increasingly data-intensive, HBM will remain a critical enabler of faster, smarter, and more energy-efficient computing systems worldwide.